Enhancing Motor Imagery based Brain Computer Interfaces for Stroke Rehabilitation

Saher Soni, Shivam Chaudhary, and Krishna Prasad Miyapuram

In Proceedings of the 7th Joint International Conference on Data Science & Management of Data (11th ACM IKDD CODS and 29th COMAD), 2024

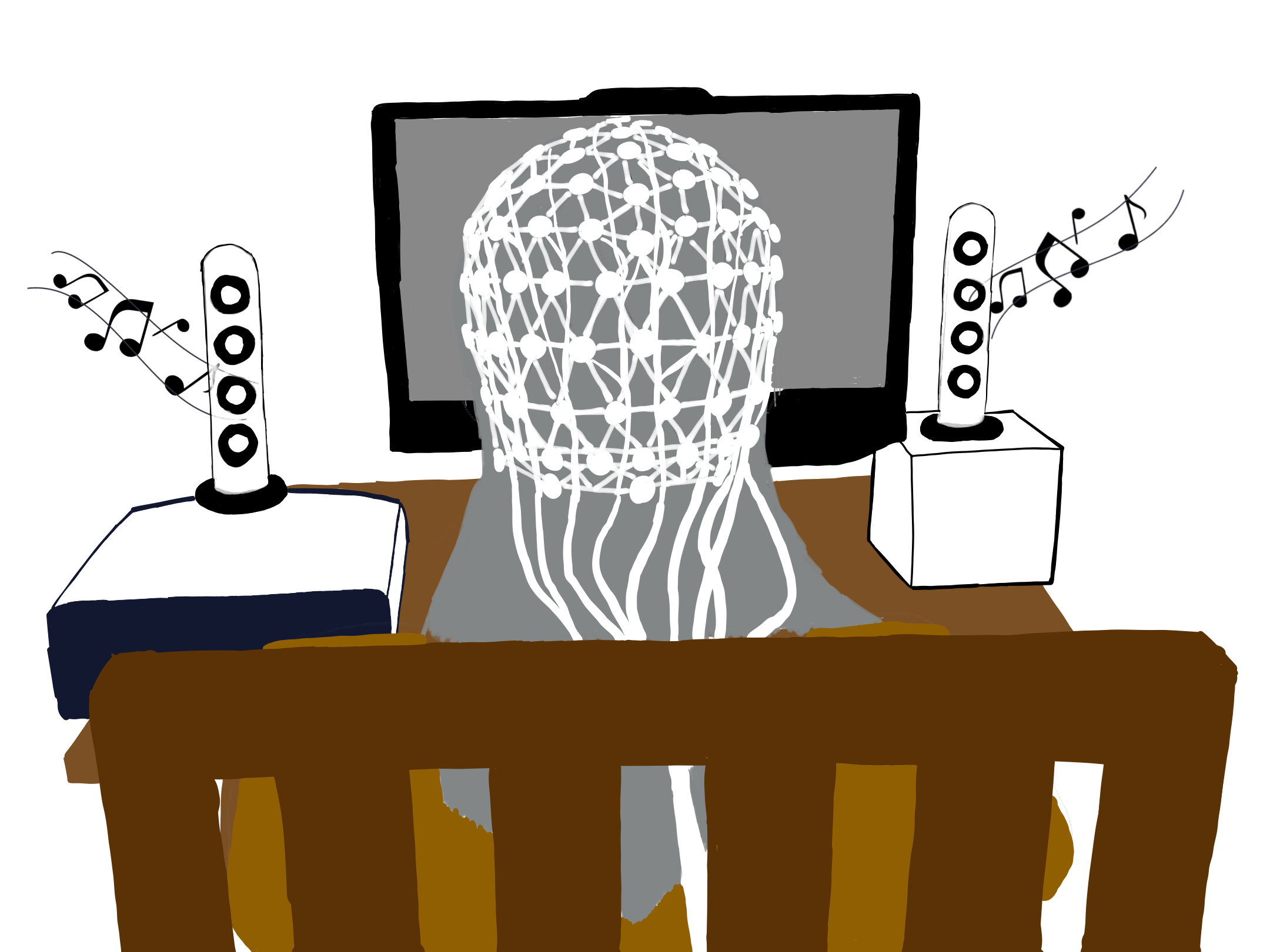

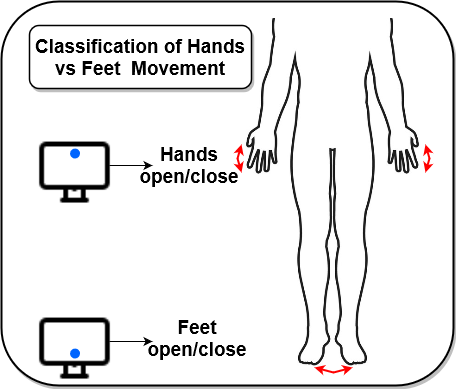

Globally, the prevalence of disability among stroke survivors exceeds 80%, and upper-limb movement impairments affect over 85% of these individuals. To address this challenge, motor imagery (MI)-based brain-computer interfaces (BCIs) have emerged as a promising approach for translating a person's imagined motor intentions into control signals for external devices. Electroencephalography (EEG) is commonly used in MI-BCIs because it is non-invasive, portable, affordable, and offers high temporal resolution. The present study used the publicly available EEG Motor Movement/Imagery Dataset (EEGMMIDB), which comprises 64-channel EEG recordings from 109 participants sampled at 160 Hz. The aim was to distinguish between opening/closing of the palms and of the feet by applying a Long Short-Term Memory (LSTM) network directly to cleaned EEG signals, bypassing traditional feature extraction methods, which are computationally intensive and time-consuming. By tuning the hyperparameters related to epochs and segment length, we achieved an average classification accuracy of 71.2% across subjects. This research highlights the efficacy of deep learning approaches in generating robust control signals for predicting motor intentions from EEG signals without laborious feature extraction. By leveraging deep learning models, MI-BCI devices can advance neuro-rehabilitation, particularly after stroke, by providing motor assistance that enables patients to execute movements through imagination alone.
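A minimal sketch of this kind of pipeline, assuming NumPy, is given here to make "LSTM directly on cleaned EEG" and "segment length" concrete. It splits one 64-channel, 160 Hz trial into fixed-length segments shaped (time, channels) and runs each segment through a single-layer LSTM forward pass followed by a softmax over two classes (palms vs feet). The helper names `segment_trial`, `lstm_forward`, and `classify_windows`, the 1 s segment length, the 32 hidden units, the synthetic trial, and the random untrained weights are all hypothetical illustrations, not the authors' architecture; in practice the weights would be learned with a deep learning framework.

```python
import numpy as np

FS = 160          # EEGMMIDB sampling rate (Hz)
N_CHANNELS = 64   # EEGMMIDB channel count

def segment_trial(trial, seg_len, step=None):
    """Hypothetical helper: split a (channels, samples) trial into windows
    shaped (n_windows, seg_len, channels), i.e. time-major LSTM input.
    Non-overlapping by default; pass step < seg_len for overlap."""
    step = seg_len if step is None else step
    _, n_samp = trial.shape
    starts = range(0, n_samp - seg_len + 1, step)
    return np.stack([trial[:, s:s + seg_len].T for s in starts])

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_forward(x, W, U, b):
    """Run one LSTM layer over x of shape (T, input_dim) and return the
    final hidden state. W: (4H, input_dim), U: (4H, H), b: (4H,).
    Gate order in the stacked weights (an assumption): i, f, g, o."""
    H = U.shape[1]
    h = np.zeros(H)
    c = np.zeros(H)
    for x_t in x:
        z = W @ x_t + U @ h + b
        i = sigmoid(z[0:H])          # input gate
        f = sigmoid(z[H:2 * H])      # forget gate
        g = np.tanh(z[2 * H:3 * H])  # candidate cell update
        o = sigmoid(z[3 * H:4 * H])  # output gate
        c = f * c + i * g            # new cell state
        h = o * np.tanh(c)           # new hidden state
    return h

def classify_windows(windows, params):
    """Hypothetical helper: class probabilities (n_windows, 2), one row
    per segment, from the final LSTM state plus a dense softmax layer."""
    W, U, b, W_out, b_out = params
    probs = []
    for win in windows:
        logits = W_out @ lstm_forward(win, W, U, b) + b_out
        e = np.exp(logits - logits.max())  # numerically stable softmax
        probs.append(e / e.sum())
    return np.array(probs)

rng = np.random.default_rng(0)
H = 32  # hidden units (assumed)
params = (
    rng.normal(0, 0.1, (4 * H, N_CHANNELS)),  # input weights (untrained)
    rng.normal(0, 0.1, (4 * H, H)),           # recurrent weights (untrained)
    np.zeros(4 * H),                          # gate biases
    rng.normal(0, 0.1, (2, H)),               # output layer: palms vs feet
    np.zeros(2),
)

# Random numbers standing in for a cleaned 4 s EEG trial (assumed length).
trial = rng.normal(size=(N_CHANNELS, 4 * FS))
windows = segment_trial(trial, seg_len=FS)  # 1 s segments
probs = classify_windows(windows, params)
print(windows.shape, probs.shape)  # (4, 160, 64) (4, 2)
```

Shorter segments yield more training windows per trial but give the LSTM less temporal context per window, which is one reason segment length is worth treating as a tunable hyperparameter.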